Artificial Intelligence

Practical Artificial Intelligence

There’s an old saying in computer science circles that when we have no idea how to make a piece of software do something smart we call it “Artificial Intelligence” but once it’s solved we look back with 20-20 hindsight and say it was “Software Engineering”. A computer becoming the world chess champion is the quintessential example of this. Once considered a holy grail of AI, by the time Deep Blue actually dethroned Kasparov, the computing world yawned, “Oh it was just brute force computing power, nothing truly intelligent is really happening”.

Beating the world champions at Jeopardy was slightly more interesting because we acknowledge the vast range of knowledge and language understanding involved. But ultimately, since Jeopardy is just a game, we are left with the feeling, “So what? How does this affect my life one way or another?” Enter Siri, the voice recognition system integrated into the new iPhone 4S.

When I heard about the feature and saw what it claimed to do, …

The AI-Box Experiment

Several years ago I became aware of Eliezer Yudkowsky’s “AI-Box Experiment”, in which he plays the role of a transhuman “artificial intelligence” and attempts (via dialogue only) to convince a human “gatekeeper” to let him out of a box in which he is being contained (presumably so the AI doesn’t harm humanity). Yudkowsky ran this experiment twice, and both times he convinced the gatekeeper to let the AI out of the box, despite the fact that the gatekeeper swore up and down that there was no way to persuade him to do so.

I have to admit I think this is one of the most fascinating social experiments ever conceived, and I’m dying to play the game as gatekeeper. The problem, I realized after reading Yudkowsky’s writeup, is that there are (at least) two preconditions which I don’t meet:

Currently, my policy is that I only run the test with people who are actually advocating that an AI Box be used …

Convergence

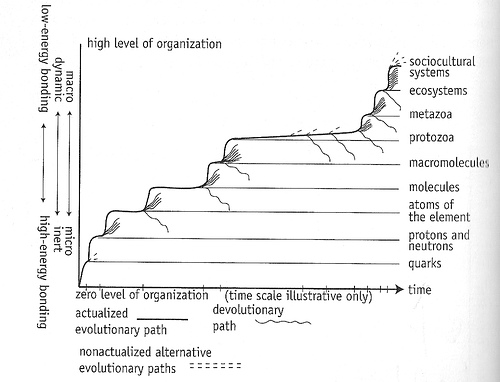

As readers of my blog posts know, I talk a lot about evolutionary systems, the formal structure of cooperation, and the role of both in the emergence of new levels of complexity, and I sometimes use cellular automata to make points about all these things and the reification of useful models (here’s a summary of how they all relate). I’ve also touched on this “thing” going on with the system of life on Earth that is related to the technological singularity but really is the emergence (or convergence) of an entirely new form of intelligence/life/collective consciousness/cultural agency, above the level of human existence.

From The Chaos Point. Reproduced with permission from the author.

In a convergence of a different sort, many of these threads, which all come together and interrelate in my own mind, came together in various conversations and talks within the last 15 hours. And while it’s impossible to explain this all in detail, it’s really exciting to find other people who are on …

Superfoo

Response to Superorganism as Terminology.

I was actually about to post something about terminology, so I’m glad this came up. It’s just so difficult to choose words to describe concepts that have little precedent without going to the extreme of overloading on the one end (e.g. “organism”) or of being totally meaningless on the other (e.g. “foo”). I have tried to use terms that are the closest in meaning to what I’m after, but there’s no avoiding misinterpretation. I can only hope that by defining and redefining to an audience that is not quick to make snap judgments, but rather considers the word usage in context, we can converge on at least a common understanding of what I am claiming. From there at least we have a shot at real communication of ideas and hopefully even agreement.…

Embodied Cognition

Until recently, Artificial Intelligence research has been grounded in a theory of cognition that is based on symbolic reasoning. That is, somewhere in our heads concepts are represented symbolically and reasoned about via deduction and induction.

At long last, AI researchers are truly learning from human cognition (oh, the irony!). Introducing Leo, the robot that learns to model and reason about the world the way human babies do, via embodied experience and social interactions:

…