Agency

Convergence

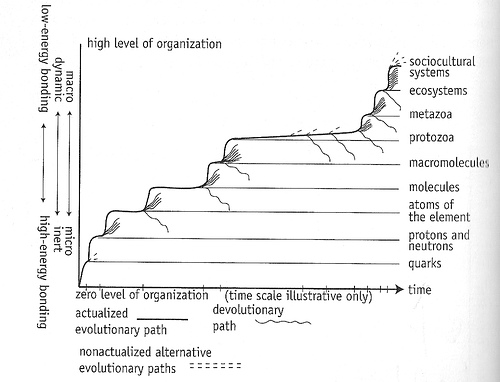

As readers of my blog posts know, I talk a lot about evolutionary systems, the formal structure of cooperation, and the role of both in the emergence of new levels of complexity. I sometimes use cellular automata to make points about all these things and about the reification of useful models (here’s a summary of how they all relate). I’ve also touched on this “thing” going on with the system of life on Earth that is related to the technological singularity but is really the emergence (or convergence) of an entirely new form of intelligence/life/collective consciousness/cultural agency, above the level of human existence.

From The Chaos Point. Reproduced with permission from the author.

In a convergence of a different sort, many of these threads, which all interrelate in my own mind, came together in various conversations and talks within the last 15 hours. And while it’s impossible to explain all of this in detail, it’s really exciting to find other people who are on …

Comments on Human Cultural Transformation

This is a follow-up to Ben’s post on Human Cultural Transformation Triggered by Dense Populations. It has too many links to be accepted directly as a comment…

In thinking about these questions, it helps me to remind myself of the difference between evolution and emergence. Evolution happens whenever you have a population of agents with heritable variation and differential reproduction rates. There are at least two types of emergence, both of which can create new types of agents. Various self-reinforcing mechanisms lead to stronger and more stable agency. We may not even recognize nascent agents for what they are until their agency (or coherence) becomes strong enough. For instance, many people have a hard time wrapping their heads around cultural agency of any form.
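To make the definition of evolution above concrete, here is a minimal sketch of a Wright-Fisher-style simulation. It is an illustration I chose, not something from the original post. The two types "A" and "B", their fitness values, the population size and the optional mutation rate are all hypothetical parameters. Heredity means each offspring copies its parent's type. Differential reproduction means parents are sampled in proportion to fitness. Those two ingredients are enough for the fitter type to spread.

```python
import random


def wright_fisher(pop_size=1000, generations=50, p0=0.1,
                  fitness=(1.5, 1.0), mutation_rate=0.0, seed=0):
    """Toy model with two heritable types, 'A' and 'B' (hypothetical).

    Returns the frequency of type 'A' in each generation.
    """
    rng = random.Random(seed)
    fit = {"A": fitness[0], "B": fitness[1]}
    n_a = round(pop_size * p0)
    population = ["A"] * n_a + ["B"] * (pop_size - n_a)
    history = [n_a / pop_size]
    for _ in range(generations):
        # Differential reproduction: parents are drawn in proportion to fitness.
        weights = [fit[agent] for agent in population]
        parents = rng.choices(population, weights=weights, k=pop_size)
        # Heredity: each offspring copies its parent's type, with rare mutation.
        population = [
            ("B" if p == "A" else "A") if rng.random() < mutation_rate else p
            for p in parents
        ]
        history.append(population.count("A") / pop_size)
    return history


freqs = wright_fisher()
print(f"start: {freqs[0]:.2f}, end: {freqs[-1]:.2f}")
```

Setting `fitness=(1.0, 1.0)` removes the selective difference. Any change in frequency is then random drift alone.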

Obviously none of us here has a problem with the concept of non-human agency, but as Alex and Ben collectively point out, cultural agents depend on human agents for their very existence. Yet …

“Bad people do bad things”

In listening to this account of Hemant Lakhani, convicted in 2005 of illegal arms dealing, I was reminded of another This American Life episode about Brandon Darby. Underlying both stories are accounts of seemingly incompetent, misguided would-be bad guys who were set on a path of evildoing by law-enforcement agents during sting operations.

What I found most interesting was the quote in the title of this post, spoken by the prosecutor in the Lakhani case. It was his justification for why it was okay to have the U.S. military supply Lakhani with the weapon he was convicted of illegally dealing. (If you listen to the story, you will learn that Lakhani had been promising the informant for a long time that he could procure weapons, and that he had been unsuccessful on his own.)

While it seems on the surface that “bad people do bad things” (i.e., that’s how bad things get done: they require a bad person to do them), …

Stability Through Instability

A friend pointed me to a doubly prescient talk George Soros gave in 1994 about his theory of reflexivity in markets. Essentially, Soros notes that there is feedback between what agents believe about the market and how the market behaves. That isn’t groundbreaking, but he takes the idea to some logical conclusions that contrast with standard economic theory:…

The Vanguard of Science: Bonnie Bassler

The import of this talk goes way beyond the specific and stunning work that Bassler and her team have done on quorum sensing. In my mind, this is the prototype for good biological science:…

Superfoo

Response to Superorganism as Terminology.

I was actually about to post something about terminology, so I’m glad this came up. It’s just so difficult to choose words to describe concepts that have little precedent without going to one extreme of overloaded terms (e.g. “organism”) or the other extreme of totally meaningless ones (e.g. “foo”). I have tried to use the terms closest in meaning to what I’m after, but there’s no avoiding misinterpretation. I can only hope that, by defining and redefining terms for an audience that considers word usage in context rather than making snap judgments, we can converge on at least a common understanding of what I am claiming. From there we at least have a shot at real communication of ideas and, hopefully, even agreement.…

Response to "Superorganism Considered Harmful"

This is a response to Kevin’s post responding to my post.

…Rafe makes an analogy to cells within a multicellular organism. How does this support the assertion that there will only be one superorganism and that we will need to subjugate our needs to its own? Obviously, there are many multicellular organisms. Certainly, there are many single-celled organisms that exist outside of multicellular control today. So where is the evidence that there will be only one and that people won’t be able to opt out in a meaningful sense?

Superorganism and Singularity

There is an aspect to The Singularity which is not discussed much, an orthogonal dimension that is already taking shape, and which is perhaps more significant than what is implied by the “standard definition”:

…The Singularity represents an “event horizon” in the predictability of human technological development past which present models of the future may cease to give reliable answers, following the creation of strong AI or the enhancement of human intelligence. (Definition taken from The Singularity Summit website)

Autocatalytic Systems

The above is a self-replicating dynamic structure from a class of systems called cellular automata (click here to run the simulation). Below is a self-replicating dynamic structure from a class of systems called “life”:…
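The structure pictured in the original post isn't reproduced here. As a stand-in, the sketch below uses what may be the simplest known case of replication in a cellular automaton. It is my choice of example, not the author's simulation. Under elementary rule 90, each cell becomes the XOR of its two neighbours. Because the rule is linear over GF(2), any finite pattern reappears after 2^k steps as two copies shifted by ±2^k, as long as the copies don't overlap. The pattern, grid width and start position below are arbitrary.

```python
def rule90_step(cells):
    """One step of elementary CA rule 90 on a ring: new[i] = old[i-1] XOR old[i+1]."""
    n = len(cells)
    return [cells[(i - 1) % n] ^ cells[(i + 1) % n] for i in range(n)]


def run(cells, steps):
    for _ in range(steps):
        cells = rule90_step(cells)
    return cells


width, start = 64, 30
pattern = [1, 1, 0, 1, 1]  # arbitrary "parent" structure
cells = [0] * width
cells[start:start + len(pattern)] = pattern

after = run(cells, 8)  # 8 = 2**3, so copies appear at offsets -8 and +8
print("".join("#" if c else "." for c in after))
```

Nothing in the rule "knows" about the pattern. The copying falls out of the local dynamics, which is the sense in which such systems are autocatalytic.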

Complex Systems Concept Summary

I figured it was time for a reset, so the following is a summary of much of the foundational posting I’ve done on this blog so far. As always, it is a work in progress, subject to refinement and learning.…

TED Talk: Susan Blackmore

Memes and “temes”

Apropos of Kevin’s post yesterday on the “Singularity”, we need to take cultural agency (which includes technological and socio-technological agency) more seriously:…

Complex Systems Defend Themselves

I’ve talked on here about the importance of taking seriously the notion of agency as it applies to systems other than biological ones. In reading a recent Wired retrospective on the predictions they called wrong, I was struck by the feeling that their error was the same in all three cases: underestimating the degree to which complex systems will defend themselves in the face of attack, as if they were living, breathing organisms.…

Coherence

I posted earlier on emergent causality. One aspect that needs to be elaborated on is the concurrent, self-interdependent nature of emergence, or in other words the chicken and egg problem.…

Three Kinds of Cooperation

Ecologists speak about two types of cooperation — mutualism and commensalism — which distinguish whether both or just one of a pair is benefiting. I’d like to look at a different dimension of cooperation that has to do with communication. There are at least three different types of cooperation along this dimension, though perhaps you can distinguish more (if so, please post a comment!)…

Genotype, Phenotype, Schmenotype

The distinction between “genotype” and “phenotype” is an artificial one that obfuscates understanding past a certain point. As Dawkins points out in his selfish gene argument, from the standpoint of the gene, the gene is the phenotype and the organism is the genotype. This is not to say that we should go overboard and anoint the gene as supreme. “Genotype” and “phenotype” are concepts. From a complex systems standpoint, they are two frozen snapshots (stages) in an ongoing autocatalytic cycle. Other stages in between could be singled out and studied (e.g. ontogenesis), but we are not good at conceptualizing dynamic processes and prefer to look at relatively stable forms. We forget that these stable forms are part of the autocatalytic process, which is ongoing.…

The Logical Necessity of Group Selection

There has been a long-standing debate about the notion of group selection, the idea that populations of organisms can be selected for en masse over competing populations. The Darwinian “purists” claim that natural selection (NS) acts only at the level of individuals. But if that’s true, then how can multicellular organisms be subject to NS? After all, what are multicellular organisms if not groups of single-celled organisms?…
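A standard textbook way to see how selection can act on groups is the Simpson's-paradox illustration of multilevel selection. I am offering it as an aside; it is not necessarily the argument the full post makes. In this toy model, an altruist pays a cost `cost`, and everyone in a group gains `benefit` times the group's altruist fraction. The payoff scheme, the parameter values and the two group compositions are all hypothetical. Within each group, altruists lose ground. Yet the altruist-rich group out-reproduces the other, so altruists gain ground overall.

```python
def next_generation(altruists, selfish, benefit=2.0, cost=0.2, base=1.0):
    """Expected offspring counts for one group (hypothetical payoff scheme)."""
    p = altruists / (altruists + selfish)
    w_alt = base + benefit * p - cost   # altruists pay the cost...
    w_self = base + benefit * p         # ...but everyone shares the benefit
    return altruists * w_alt, selfish * w_self


def fraction(alt, selfish):
    return alt / (alt + selfish)


groups = [(20.0, 80.0), (80.0, 20.0)]  # (altruists, selfish) per group
offspring = [next_generation(a, s) for a, s in groups]

for (a0, s0), (a1, s1) in zip(groups, offspring):
    print(f"within group: {fraction(a0, s0):.3f} -> {fraction(a1, s1):.3f}")

global_before = fraction(sum(a for a, _ in groups), sum(s for _, s in groups))
global_after = fraction(sum(a for a, _ in offspring), sum(s for _, s in offspring))
print(f"overall: {global_before:.3f} -> {global_after:.3f}")
```

With these numbers, the altruist share falls within each group (0.200 to 0.176, 0.800 to 0.787) but rises overall (0.500 to about 0.568).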

Types of Emergence

Stability can be thought of as a measure of agency. That is, the more stable a system is, the better we are able to recognize it as a distinct agent, a system that actively, structurally or by happenstance persists through time, space and/or other dimensions. Burton Voorhees defines a concept of virtual stability as a “state in which a system employs self-monitoring and adaptive control to maintain itself in a configuration that would otherwise be unstable.” He clarifies that virtual stability is not the same as stability or metastability and gives formal definitions of all three.* By making a distinction between stability, metastability and virtual stability, we can gain further clarity on agency itself and the emergence of new agents and new levels of organization.…
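Voorhees's formal definitions aren't reproduced here, so this is only a loose illustration under my own assumptions. Take a one-dimensional state `x` with an unstable fixed point at 0, so that x_{t+1} = a·x_t with a > 1. Add a fixed-gain corrective feedback u = -k·x as a crude stand-in for "self-monitoring and adaptive control." The names `a` and `gain` and their values are hypothetical. With no feedback, a small perturbation grows. With feedback, the system holds itself in a configuration that would otherwise be unstable.

```python
def simulate(x0, a, gain, steps):
    """Iterate x <- a*x + u with corrective feedback u = -gain*x."""
    x = x0
    trajectory = [x]
    for _ in range(steps):
        u = -gain * x      # self-monitoring: observe own state, push back toward 0
        x = a * x + u      # intrinsic (unstable) dynamics plus correction
        trajectory.append(x)
    return trajectory


free = simulate(x0=0.01, a=1.5, gain=0.0, steps=20)        # no control
controlled = simulate(x0=0.01, a=1.5, gain=1.0, steps=20)  # closed loop: x <- 0.5*x
print(f"uncontrolled: {free[-1]:.3g}, controlled: {controlled[-1]:.3g}")
```

The gain here is fixed. A genuinely adaptive controller would also tune `gain` from what it observes, which is closer to what "adaptive control" in the quoted definition implies.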

Dangerous Ideas

Daniel Horowitz just forwarded me an interesting article in which Steven Pinker debates and defends the merits of exploring dangerous ideas, even though they may threaten our core values and deeply offend our sensibilities. What struck me as most interesting (and laudable) was Pinker’s willingness to play devil’s advocate against his own argument and to suggest that exploring dangerous ideas may itself be too dangerous an idea, and thus should not be adopted as a practice:

…But don’t the demands of rationality always compel us to seek the complete truth? Not necessarily. Rational agents often choose to be ignorant. They may decide not to be in a position where they can receive a threat or be exposed to a sensitive secret. They may choose to avoid being asked an incriminating question, where one answer is damaging, another is dishonest and a failure to answer is grounds for the questioner to assume the worst (hence the Fifth Amendment protection against being forced to testify against oneself). Scientists

Cooperation and Competition

It is well understood that the primary relationship between agents in an evolutionary system is competition for resources: food, mates, territory, control, etc. It is also recognized that agents not only compete but also cooperate with one another, sometimes simultaneously; for instance, they hunt in packs (cooperation) while also fighting for alpha status within the pack (competition). If we look at inter-agent behaviors as lying on a continuum with pure competition at one end and pure cooperation* at the other, it is clear that there is a broad range, both within species and between agents of different species. Originally, cooperative behavior was explained away as an exception to the general competitive landscape, occurring only when two agents shared enough genetic code (such as parent and child) that cooperation could be seen as a form of genetic selfishness. While this is true in a narrow sense, it misses the larger point, which is that cooperation between any two or more agents can confer advantages to all …

Mechanisms of Agency

The following is a non-exhaustive catalog. Note that these mechanisms are in fact emergent properties of the system under study, a fact which has some fairly profound consequences when considering the lowest known levels in physical systems. Read Ervin Laszlo’s chapter, Aspects of Information, in Evolution of Information Processing Systems (EIPS) for more theoretical background.

Stasis

The most trivial form of stability we can think of is an agent existing in the same place over time without change. This may only make sense as you read on, so don’t get caught up here.

Movement

Keeping time in the equation but allowing physical location to vary, we see that agents can move and continue to exist and be recognized as the “same”. This is obvious in the physical world we live in, but consider what is going on with gliders in the Game of Life. The analogy is more than loose since cellular automata are network topologies which mirror physical space in one or two …
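The glider case can be checked directly. The sketch below is a minimal Game of Life (birth on 3 neighbours, survival on 2 or 3) on an unbounded grid stored as a set of live cells. It steps the standard glider. The coordinate convention (x to the right, y downward) is an assumption. None of the glider's cells persist unchanged, yet after 4 generations the same shape reappears, displaced one cell diagonally. That recurring shape is what lets us recognize it as the "same" moving agent.

```python
from collections import Counter


def life_step(live):
    """One Game of Life generation (B3/S23) on an unbounded grid of (x, y) cells."""
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Born with exactly 3 neighbours; survives with 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


def run(live, generations):
    for _ in range(generations):
        live = life_step(live)
    return live


#  .#.
#  ..#
#  ###
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
later = run(glider, 4)
print(sorted(later))
```

Generations 1 to 3 are different shapes. Only every fourth generation is a pure translation of the original.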

Cultural Agency

Talking about culture from a complex systems standpoint requires a bit of an inductive leap of faith, as follows. If you buy the argument that agents emerge from agents (and their interactions) at lower levels, then it is clear that there is some level of agency above individual humans.* What we call this level varies according to who is telling the story and what the thrust of their thesis is: population, culture, society, memetics, economy, zeitgeist, etc. The reality is that all of these levels (and more) coexist, and we are talking about interlocking systems at varying “partial levels” with dynamic, and only loosely constrained, information flow. Nonetheless, there are common elements and properties we can discuss that are at the very least distinct from the realm of an isolated individual human being.

…