Convergence

As readers of my blog posts know, I talk a lot about evolutionary systems, the formal structure of cooperation, and the role of both in the emergence of new levels of complexity. I sometimes use cellular automata to make points about all these things and about the reification of useful models (here’s a summary of how they all relate). I’ve also touched on this “thing” going on with the system of life on Earth that is related to technological singularity but is really the emergence (or convergence) of an entirely new form of intelligence/life/collective consciousness/cultural agency, above the level of human existence.

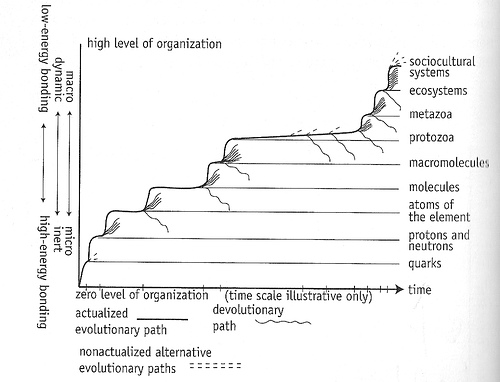

From The Chaos Point. Reproduced with permission from the author.

In a convergence of a different sort, many of these threads, which all interrelate in my own mind, came together in various conversations and talks within the last 15 hours. And while it’s impossible to explain it all in detail, it’s really exciting to find other people who are on the same wavelength and have thought a lot harder about each of the pieces than I have. Just to give you a taste, here are the human players in this personal convergence and how they relate to the above themes:

Kevin Carpenter: I first heard him talk at LA Idea Project on the concept of Convergence and how it’s critically different from Kurzweilian Singularity and much more similar to Superorganism. I ran into him again at a party last night, and he was excited to have given more cogent shape to his thinking in this area.

Steve Omohundro: I went to check out the H+ Summit this morning and he was speaking matter-of-factly on so many areas of interest and dropping research-backed evidence to support all of this pontification. While the details aren’t in this slide presentation, you should glance through it anyway, especially if you have been intrigued at all by things I’ve written about.

Dan Miler: Spoke right after Omohundro on cellular automata and simulation, and the metaphor/paradigm of digital physics. He highlighted several projects by other people which are shedding light on deep universal structure, including the work of Alex Lamb. Lamb has built the first (as far as I know) cellular automaton system based on irregular lattices (i.e. arbitrary network structures). Just like in Conway’s Game of Life (the most well-known cellular automaton), there emerge persistent dynamic patterns similar to gliders:

Here are more examples from the Jellyfish system.
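To make the idea of a cellular automaton on an arbitrary network a bit more concrete, here is a minimal sketch in Python. To be clear, this is not Lamb's system or his rules (I don't know those details). The function name `step`, the example `GRAPH`, and the birth/survival thresholds are all my own assumptions, chosen only to show how a Life-like update works when each cell can have a different number of neighbors.

```python
from typing import Dict, FrozenSet, Hashable, Set

# A network is a mapping from each node to the set of its neighbors.
# Unlike a regular grid, nodes may have different numbers of neighbors.
Graph = Dict[Hashable, Set[Hashable]]


def step(
    graph: Graph,
    alive: Set[Hashable],
    birth: FrozenSet[int] = frozenset({2}),       # assumed rule, not Lamb's
    survive: FrozenSet[int] = frozenset({1, 2}),  # assumed rule, not Lamb's
) -> Set[Hashable]:
    """Apply one synchronous Life-like update on an arbitrary graph.

    A dead node becomes alive if its count of live neighbors is in `birth`;
    a live node stays alive if its count of live neighbors is in `survive`.
    """
    next_alive: Set[Hashable] = set()
    for node, neighbors in graph.items():
        live_neighbors = sum(1 for n in neighbors if n in alive)
        if node in alive:
            if live_neighbors in survive:
                next_alive.add(node)
        elif live_neighbors in birth:
            next_alive.add(node)
    return next_alive


# A small, hypothetical irregular network: node "b" has three neighbors,
# "e" has only one.
GRAPH: Graph = {
    "a": {"b", "c"},
    "b": {"a", "c", "d"},
    "c": {"a", "b"},
    "d": {"b", "e"},
    "e": {"d"},
}

state = {"a", "b"}
for generation in range(3):
    print(generation, sorted(state))
    state = step(GRAPH, state)
```

The only real change from the Game of Life is that the neighborhood comes from the network's edges rather than from grid coordinates. On a graph this small the pattern quickly settles into a fixed triangle; glider-like moving patterns would need a much larger network and carefully chosen rules.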

What intrigues me most about this is that the brain is a nonregular lattice (by definition all networks are). Neuronal firing patterns are (that is to say, cognition is) computationally isomorphic to cellular automata on nonregular lattices. The jellyfish patterns seen in Lamb’s simulations are exactly what I would imagine to exist in the brain. These would be the semi-autonomous interacting — sometimes cooperating, sometimes conflicting — agents that Omohundro refers to as being the basis of all cognition/intelligence. It’s exactly what Minsky was referring to in Society of Mind, and what Palombo referred to in The Emergent Ego. It’s also the basis of crowd wisdom or collective intelligence.

Which leads us back to Convergence. As we learn more about the nature of cognition, intelligence and thought (both conscious and unconscious), I believe we will recognize ever more clearly how there is new sentience emerging, not alongside human beings (though that is surely happening as well), but rather at the level above human beings and their technological spawn.
