Singularity

Scientific Singularity?

A couple of weeks ago Kevin and I went back and forth on whether or not science is “broken”. We came to agree that we have different basic assumptions about what constitutes “utility”. Because of this, while we could agree that each of our arguments made sense logically, we ultimately ended up with opposite conclusions. After all, for something to be broken, it must once have served a purpose that it can no longer serve because of a mechanical or structural failure. And to have a purpose means that it has value (i.e. utility) to someone.

So whether science is broken or still works depends on your definition of utility. Kevin and I agreed on a measurement for scientific utility based on (a) how well it explains observed phenomena, (b) how well it predicts new phenomena, and (c) how directly it leads to the creation of technologies that improve human lives. We can call it “explanatory power”, or EP for short. …

The AI-Box Experiment

Several years ago I became aware of Eliezer Yudkowsky’s “AI-Box Experiment”, in which he plays the role of a transhuman “artificial intelligence” and attempts (via dialogue only) to convince a human “gatekeeper” to let him out of the box in which he is being contained (presumably so the AI doesn’t harm humanity). Yudkowsky ran this experiment twice, and both times he convinced the gatekeeper to let the AI out of the box, even though the gatekeeper had sworn up and down that there was no way he could be persuaded to do so.

I have to admit I think this is one of the most fascinating social experiments ever conceived, and I’m dying to play the game as gatekeeper. The problem, as I realized after reading Yudkowsky’s write-up, is that there are (at least) two preconditions I don’t meet:

Currently, my policy is that I only run the test with people who are actually advocating that an AI Box be used …

The New Scientific Enlightenment

There is a massive paradigm shift occurring: beliefs about the nature of scientific inquiry that have held for hundreds of years are being questioned.

As laypeople, we see the symptoms all around us. Climatology, economics, medicine, even fundamental physics: these domains (and more) have all become battlegrounds, with growing armies of Ph.D.s and Nobel Prize winners entrenched in opposing camps. Here’s what’s at stake:

. . .

Scientific Objectivity

In 1972 Kahneman and Tversky launched the study of human cognitive bias, work that later won Kahneman the Nobel Prize. Even a cursory reading of this now vast literature should make every logically minded scientist very skeptical of their own work.

A few scientists do take bias seriously (cf. Overcoming Bias and Less Wrong). Yet, nearly 40 years later, it might be fair to say that this research’s impact on science as a whole has been limited to improving clinical trials and spawning behavioral economics.

In 2008, Farhad Manjoo poignantly illustrated …

The Technium

Here are the slides from his talk. My favorites are 3, 4, 8, 10, 15, 19, 21, 23, 26, 28, 29, 35, 37, 38, 53, 66, 68.

Convergence

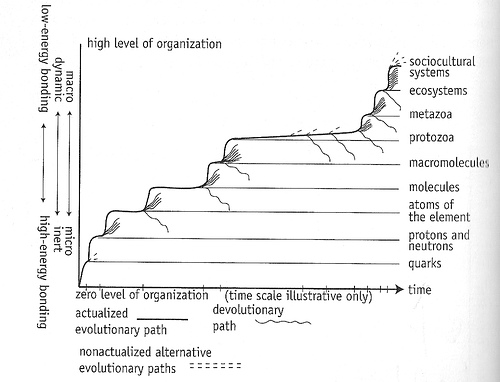

As readers of my blog posts know, I talk a lot about evolutionary systems, the formal structure of cooperation, and the role of both in the emergence of new levels of complexity, and I sometimes use cellular automata to make points about all these things and about the reification of useful models (here’s a summary of how they all relate). I’ve also touched on this “thing” going on with the system of life on Earth that is related to the technological singularity but is really the emergence (or convergence) of an entirely new form of intelligence/life/collective consciousness/cultural agency, above the level of human existence.

From The Chaos Point. Reproduced with permission from the author.

In a convergence of a different sort, many of these threads, which all interrelate in my own mind, came together in various conversations and talks over the last 15 hours. And while it’s impossible to explain it all in detail, it’s really exciting to find other people who are on …

Homo Evolutis

In Juan Enriquez’s TED talk earlier this year, he made the point that humans have entered a new phase of evolution, one not seen before modern humans and their technology. This, of course, is one of the main theses of Ray Kurzweil’s book, The Singularity is Near, and the main justification for the creation of The Singularity Institute (and the related Singularity Summit) and, just recently, Singularity University.

Lest you think the concept of Homo Evolutis (a species that can control its own evolutionary path by radically extending healthy human lifespan and ultimately merging with its technology) is a fringe idea shared by sci-fi dreamers who don’t have a handle on reality, check out the list of people in charge of Singularity University (link above) and the Board members of the Lifeboat Foundation, and throw in, for good measure, Stephen Hawking, who says, “Humans Have Entered a New Phase of Evolution”. These people not only …

Methuselah Foundation

If, like Aubrey de Grey, you believe that immortality is achievable, or you are just intrigued by the possibility, you should check out this news story on The Methuselah Foundation.

…

Technology Evolution Will Eclipse Financial Crisis

This is a “precursor to the Singularity” sort of argument:…

Superfoo

Response to “Superorganism as Terminology”.

I was actually about to post something about terminology, so I’m glad this came up. It’s just so difficult to choose words to describe concepts that have little precedent without going to one extreme, an overloaded term (e.g. “organism”), or the other, a totally meaningless one (e.g. “foo”). I have tried to use the terms closest in meaning to what I’m after, but there’s no avoiding misinterpretation. I can only hope that by defining and redefining terms for an audience that doesn’t make snap judgments, but instead considers word usage in context, we can converge on at least a common understanding of what I am claiming. From there we at least have a shot at real communication of ideas and, hopefully, even agreement.…

Response to "Superorganism Considered Harmful"

This is a response to Kevin’s post responding to my post.

…Rafe makes an analogy to cells within a multicellular organism. How does this support the assertion that there will only be one superorganism and that we will need to subjugate our needs to its own? Obviously, there are many multicellular organisms. Certainly, there are many single-celled organisms that exist outside of multicellular control today. So where is the evidence that there will be only one and that people won’t be able to opt out in a meaningful sense?

Superorganism and Singularity

There is an aspect to The Singularity which is not discussed much, an orthogonal dimension that is already taking shape, and which is perhaps more significant than what is implied by the “standard definition”:

…The Singularity represents an “event horizon” in the predictability of human technological development past which present models of the future may cease to give reliable answers, following the creation of strong AI or the enhancement of human intelligence. (Definition taken from The Singularity Summit website)

Autocatalytic Systems

The above is a self-replicating dynamic structure from a class of systems called cellular automata (click here to run the simulation). Below is a self-replicating dynamic structure from a class of systems called “life”:…

Hive Mindstein

David Basanta’s blog has an interesting thread (quite a few of them, actually). Here’s the setup, but you should read the original post, including the Wired article:

…Apparently, some people are seeing some potential in cloud computing not just as an aid to science but as a completely new approach to do it. An article in Wired magazine argues precisely that. With the provocative title of The end of theory, the article concludes that, with plenty of data and clever algorithms (like those developed by Google), it is possible to obtain patterns that could be used to predict outcomes…and all that without the need of scientific models.

Response to "Thoughts on Ants, Altruism and the Future of Humanity"

[ This is an edited version of a blog comment on Brandon Keim’s Wired Science post here ]

The question of whether we will “break through” to a superorganism or collapse through any number of spiraling cascades or catastrophic events is the subject of Ervin Laszlo’s book, The Chaos Point, which I highly recommend. In it, he gives a sweeping view of the complex evolutionary dynamic (focusing on human society), and makes a solid argument that we are at an inflection point in history right now, similar to the “saltation” that begat multicellularity.…

Blog Comment, Cooperation, Culture, Emergence, Levels, Singularity, Society